From AI Demo to Enterprise Solutions

Feb 10, 2026

The 3-Phase Workshop Pattern That Scales

This post explains how a single real-world AI prototype evolved into multiple enterprise implementations, and why the most reliable way to scope and deliver AI automation is a structured 3-phase workshop cycle with intentional gaps between sessions.

How this started: a technical PoC that was supposed to be “done”

In mid-2024, I started testing video automation using content from a tech YouTuber I knew (you probably know her too): Nana. The goal was simple: see whether I could automate transcription, metadata extraction, and content analysis using AWS services.

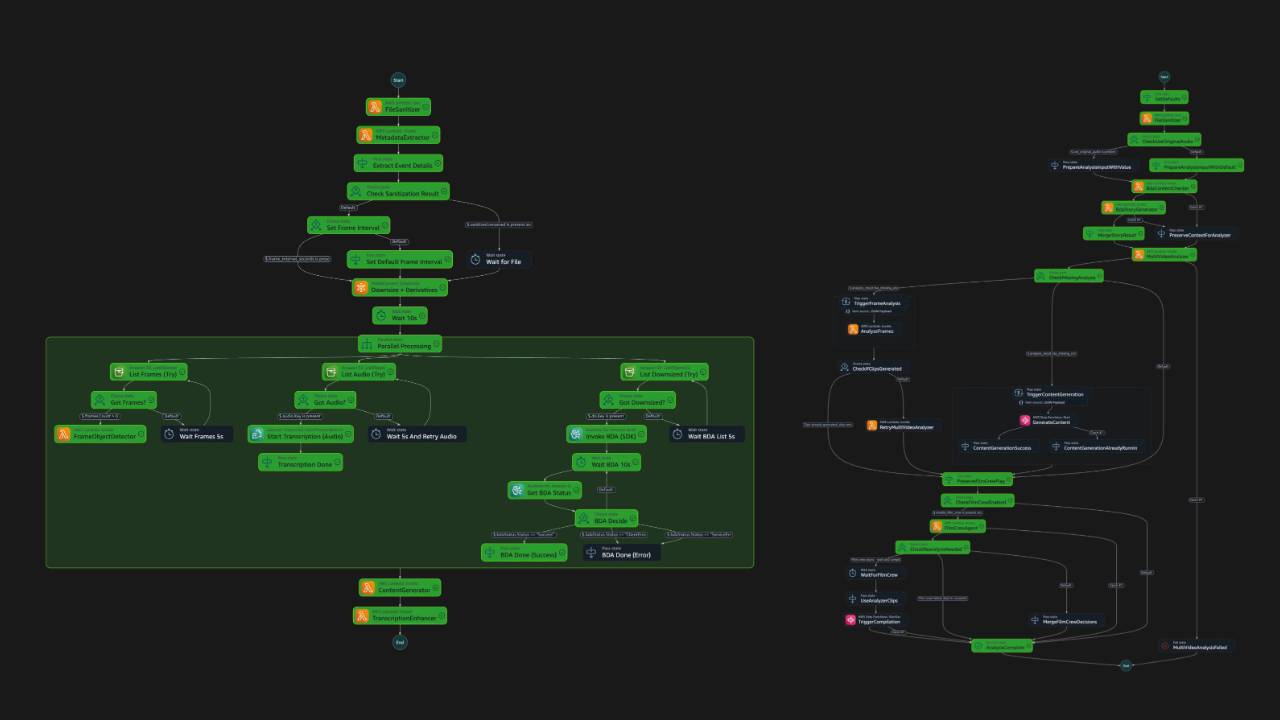

I built a pipeline:

- Transcribe for speech-to-text

- Rekognition for visual analysis

- Bedrock for intelligent enhancement

- MediaConvert for processing

- Step Functions for orchestration

I tested it on 5–10-minute videos and later on videos of 60+ minutes. It worked.

Processing time: ~2 minutes

Cost: ~€0.36 per video
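A per-video figure becomes useful once it is turned into a monthly cost envelope. The sketch below assumes the ~€0.36 per video stays flat at higher volumes; in practice Transcribe and MediaConvert bill by media duration, so treat it as a rough envelope. The monthly volume and budget are hypothetical inputs, not figures from these projects.

```python
from decimal import Decimal

COST_PER_VIDEO_EUR = Decimal("0.36")  # approximate figure from the PoC


def monthly_cost(videos_per_month: int, cost_per_video: Decimal = COST_PER_VIDEO_EUR) -> Decimal:
    """Linear estimate: assumes per-video cost does not change with volume or length."""
    return cost_per_video * videos_per_month


def within_envelope(videos_per_month: int, budget_eur: Decimal) -> bool:
    """True if the estimated monthly spend fits the (hypothetical) budget."""
    return monthly_cost(videos_per_month) <= budget_eur


if __name__ == "__main__":
    volume = 1_000  # hypothetical monthly volume
    print(monthly_cost(volume))  # 360.00
    print(within_envelope(volume, budget_eur=Decimal("500")))  # True
```

Using `Decimal` instead of `float` keeps currency arithmetic exact.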

That was supposed to be it. A technical proof-of-concept. Done.

Then a video production company saw the demo and asked: “Can this work for news reporters in the field?”

Different use case. Same technical foundation.

The pattern that emerged

Over the next 12 months, that one technical prototype spawned four distinct customer projects:

- Mobile news video: transcription + name tags + logo detection for reporters

- Sports automation: near real-time highlights with athlete detection and tracking

- Broadcast solutions: transcription quality uplift (80% → 95%) + multi-platform clips

- Event video: template-based automated editing for social-ready outputs

Same core pipeline. Different constraints. Different success criteria. Different operational realities.

And then I started noticing something else:

Each project progressed in a 3-phase cycle — with strategic gaps between sessions.

Why the gap matters (and why I stopped trying to compress everything)

I used to hate scheduling gaps. It felt like indecision. Like lost momentum.

But the gap is where enterprise AI projects either become real or die later in production.

Here’s why:

- Stakeholders talk to real users instead of guessing.

- Engineers validate constraints instead of promising “we can do it.”

- Hidden dependencies surface early (data access, security boundaries, operational workflows).

- Scope becomes sharper, not broader.

In other words: the gap is where assumptions meet reality.

Example: the “news reporter” project

Workshop 1: possibility space

The mobile video company came with multiple potential directions: news field reports, event aftermovies, business videos, product showcases.

Workshop 1 was energizing. But votes were split. People were polite. Everyone kept options open.

We ended with: “Let’s think about it.” Workshop 2 was scheduled for three weeks later.

The gap: where clarity emerged

In those three weeks:

- The product owner spoke with real reporters. One said: “If this works, it changes everything about how I work in the field.”

- The developer validated constraints and concluded: if we try all use cases, we’ll do none well.

- An external partner pushed back on scope creep: field reports only, no archive integration, no multi-source aggregation.

By Workshop 2, agreement on scope was unanimous: field reports, smartphone footage, single-user workflow, strong validation.

Workshop 2: prototype under real constraints

We tested with actual footage, real metadata requirements, and real edge cases. Not demo data. Not “ideal inputs.”

The second gap: where solutions mature

Between Workshop 2 and 3, the team tested and refined:

- validation logic

- quality targets

- cost envelopes

- failure modes and fallback behavior
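Validation logic and fallback behavior can start very simply. The sketch below assumes the standard Amazon Transcribe JSON output (`results.items`, each word carrying `alternatives[0].confidence` as a string) and routes a transcript to human review when too many words fall below a confidence floor. Both thresholds and the route names are hypothetical placeholders, not the project's actual settings.

```python
# Hypothetical thresholds: tune them per project and per content type.
MIN_WORD_CONFIDENCE = 0.80
MAX_LOW_CONFIDENCE_RATIO = 0.10


def spoken_items(transcribe_output: dict) -> list[dict]:
    """Word items only; punctuation items carry no meaningful confidence."""
    return [i for i in transcribe_output["results"]["items"] if i["type"] == "pronunciation"]


def low_confidence_words(transcribe_output: dict, floor: float = MIN_WORD_CONFIDENCE) -> list[dict]:
    """Words whose top alternative falls below `floor`, with start time in seconds."""
    flagged = []
    for item in spoken_items(transcribe_output):
        best = item["alternatives"][0]
        if float(best["confidence"]) < floor:  # confidence is stored as a string
            flagged.append({"word": best["content"], "start": float(item["start_time"])})
    return flagged


def route(transcribe_output: dict) -> str:
    """Publish automatically, or fall back to human review."""
    words = spoken_items(transcribe_output)
    if not words:
        return "human_review"  # fallback: nothing usable was recognized
    ratio = len(low_confidence_words(transcribe_output)) / len(words)
    return "auto_publish" if ratio <= MAX_LOW_CONFIDENCE_RATIO else "human_review"


sample = {
    "results": {
        "items": [
            {"type": "pronunciation", "start_time": "0.0", "end_time": "0.4",
             "alternatives": [{"confidence": "0.99", "content": "Breaking"}]},
            {"type": "pronunciation", "start_time": "0.4", "end_time": "0.8",
             "alternatives": [{"confidence": "0.42", "content": "news"}]},
            {"type": "punctuation",
             "alternatives": [{"confidence": "0.0", "content": "."}]},
        ]
    }
}

if __name__ == "__main__":
    print(route(sample))  # human_review: 1 of 2 words is below the floor
    print(low_confidence_words(sample))  # [{'word': 'news', 'start': 0.4}]
```

Returning the flagged words with timestamps lets a reviewer jump straight to the uncertain passages instead of re-watching the whole clip.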

Workshop 3: decision with confidence

By Workshop 3, we weren’t debating whether the use case was real. We were deciding how to deploy it responsibly.

The same cycle repeated across customers

Sports automation: different “fast enough”

A founder saw the same pipeline demo and asked: “Can this generate highlights for track-based races?”

New customer. New workflow. Same technical base.

- Workshop 1: define the user value (automatic smart video editing) and constraints (fixed cameras, delivery in minutes)

- Gap: capture real race footage, test multi-camera sync, validate whether athletes actually care

- Workshop 2: prototype with six feeds, confirm detection + automatic editing feasibility

- Gap: iterate based on real performance

- Workshop 3: deployment and rollout plan
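One building block of automatic highlight editing is turning detections from several fixed cameras into clip boundaries. A minimal sketch, assuming each feed yields timestamps (in seconds) where an athlete was detected and that each feed's sync offset is already known: shift everything onto one timeline, pad each detection, and merge overlapping windows into continuous clips. The padding values, feed names, and offsets are hypothetical, and this is a generic interval-merging approach, not necessarily how the project implemented it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    start: float  # seconds on the common timeline
    end: float


def to_common_timeline(detections_by_feed: dict[str, list[float]], offsets: dict[str, float]) -> list[float]:
    """Shift each feed's timestamps by its (assumed known) sync offset."""
    return [t + offsets[feed] for feed, times in detections_by_feed.items() for t in times]


def highlight_clips(detections: list[float], pre_roll: float = 2.0, post_roll: float = 3.0) -> list[Clip]:
    """Pad each detection and merge overlapping windows into single clips."""
    clips: list[Clip] = []
    for t in sorted(detections):
        start, end = max(0.0, t - pre_roll), t + post_roll
        if clips and start <= clips[-1].end:
            # Overlaps the previous window: extend it instead of starting a new clip.
            clips[-1] = Clip(clips[-1].start, max(clips[-1].end, end))
        else:
            clips.append(Clip(start, end))
    return clips


if __name__ == "__main__":
    feeds = {"finish_line": [10.0, 30.0], "back_straight": [11.0]}
    offsets = {"finish_line": 0.0, "back_straight": 0.5}  # hypothetical sync offsets
    print(highlight_clips(to_common_timeline(feeds, offsets)))
    # [Clip(start=8.0, end=14.5), Clip(start=28.0, end=33.0)]
```

Merging after padding avoids choppy, back-to-back micro-clips when an athlete is detected repeatedly within a few seconds.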

Broadcast: accuracy targets and enterprise integration

Same core pipeline, new reality: the existing transcription, at 80% accuracy, was unusable; the target was 97%+. The project also required multi-platform format generation.

- Workshop 1: define requirements and quality thresholds

- Gap: test on real TV clips

- Workshop 2: prove uplift feasibility with measurable evaluation

- Gap: harden for production constraints

- Workshop 3: integration and deployment roadmap
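“Measurable evaluation” usually means scoring transcripts against human-verified references. A common metric is word error rate (WER): substitutions, deletions, and insertions divided by the number of reference words, with accuracy often reported as 1 − WER. The sketch below assumes that definition of accuracy (the post does not say which metric was used) and tokenizes by lowercasing and splitting on whitespace. Production evaluations usually also normalize punctuation and numbers.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,                 # deletion: reference word missing
                curr[j - 1] + 1,             # insertion: extra hypothesis word
                prev[j - 1] + int(r != h),   # substitution, or a free match
            )
        prev = curr
    return prev[-1] / len(ref)


def accuracy(reference: str, hypothesis: str) -> float:
    """1 - WER, floored at 0 because WER can exceed 1 with many insertions."""
    return max(0.0, 1.0 - word_error_rate(reference, hypothesis))


if __name__ == "__main__":
    ref = "the minister announced new funding for rural schools"
    hyp = "the minister announced few funding for rural school"
    print(f"{accuracy(ref, hyp):.0%}")  # 75%
```

Running the same scorer on the old and new transcripts of the same clips is what makes an uplift claim like 80% → 95% checkable.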

The technical foundation that enabled multiple solutions

The core pipeline across customers stayed stable:

- S3 upload triggers processing

- MediaConvert downsizes, extracts audio, extracts frames

- Transcribe for speech-to-text

- Rekognition for visual analysis

- Bedrock for intelligent enhancement (summaries, structured metadata, domain rules)

- Step Functions orchestrates the workflow

- MediaConvert renders final outputs
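The orchestration layer maps naturally onto an Amazon States Language definition. The sketch below builds one in Python so its structure can be checked before deployment. Assumptions: the S3 upload reaches the state machine through an EventBridge rule (not shown); state names are hypothetical; all `Parameters` (job settings, model ID, prompts) are omitted; and Transcribe, called through the AWS SDK integration, does not wait for job completion, so a real definition would need a polling loop. This is a structural outline, not a deployable definition.

```python
import json

MC_CREATE_JOB_SYNC = "arn:aws:states:::mediaconvert:createJob.sync"


def task(resource: str, **transition) -> dict:
    """A Task state; Parameters are intentionally left out of this outline."""
    return {"Type": "Task", "Resource": resource, **transition}


def build_definition() -> dict:
    analysis_branches = [
        {   # Speech-to-text on the extracted audio (polling loop omitted)
            "StartAt": "StartTranscription",
            "States": {"StartTranscription": task(
                "arn:aws:states:::aws-sdk:transcribe:startTranscriptionJob", End=True)},
        },
        {   # Visual analysis on the extracted frames
            "StartAt": "DetectLabels",
            "States": {"DetectLabels": task(
                "arn:aws:states:::aws-sdk:rekognition:detectLabels", End=True)},
        },
    ]
    return {
        "Comment": "Outline: prepare media, analyze, enhance, render",
        "StartAt": "PrepareMedia",
        "States": {
            # Downsize, extract audio, extract frames
            "PrepareMedia": task(MC_CREATE_JOB_SYNC, Next="Analyze"),
            # Transcription and visual analysis run in parallel
            "Analyze": {"Type": "Parallel", "Branches": analysis_branches, "Next": "Enhance"},
            # Summaries, structured metadata, domain rules
            "Enhance": task("arn:aws:states:::bedrock:invokeModel", Next="RenderOutputs"),
            # Final outputs
            "RenderOutputs": task(MC_CREATE_JOB_SYNC, End=True),
        },
    }


def check_transitions(states: dict) -> None:
    """Raise ValueError if a Next points nowhere or a state has neither Next nor End."""
    for name, state in states.items():
        if "Next" in state:
            if state["Next"] not in states:
                raise ValueError(f"{name} points to unknown state {state['Next']!r}")
        elif state.get("End") is not True:
            raise ValueError(f"{name} has neither Next nor End")
        for branch in state.get("Branches", []):
            check_transitions(branch["States"])


if __name__ == "__main__":
    definition = build_definition()
    check_transitions(definition["States"])
    print(json.dumps(definition, indent=2))
```

The `Retry` and `Catch` fields on each Task state are the natural place to encode the fallback behavior discussed earlier, for example routing a failed enhancement step to a review queue instead of failing the whole execution.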

What changed was configuration and intent:

- News: journalistic validation & responsibility boundaries

- Sports: near real-time throughput and multi-camera constraints

- Broadcast: accuracy thresholds and integration with existing workflows

- Events: template-based editing and social optimization
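One way to keep a single pipeline while varying “configuration and intent” is a per-use-case profile that the orchestration reads at runtime. A minimal sketch: the field names and the `"social-default"` template identifier are hypothetical, and the only concrete values are the ones stated in this post (six camera feeds for sports, a 97% accuracy target for broadcast). Everything else is left unset rather than guessed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PipelineProfile:
    name: str
    human_validation: bool = False   # a person signs off before publishing
    near_real_time: bool = False     # prioritize throughput and latency
    camera_feeds: int = 1
    min_transcript_accuracy: Optional[float] = None  # None means no hard gate
    edit_template: Optional[str] = None              # hypothetical template ID


PROFILES = {
    "news": PipelineProfile("news", human_validation=True),
    "sports": PipelineProfile("sports", near_real_time=True, camera_feeds=6),
    "broadcast": PipelineProfile("broadcast", min_transcript_accuracy=0.97),
    "events": PipelineProfile("events", edit_template="social-default"),
}


def passes_quality_gate(profile: PipelineProfile, measured_accuracy: float) -> bool:
    """Apply the profile's accuracy threshold, if it defines one."""
    if profile.min_transcript_accuracy is None:
        return True
    return measured_accuracy >= profile.min_transcript_accuracy


if __name__ == "__main__":
    print(passes_quality_gate(PROFILES["broadcast"], 0.95))  # False
```

Keeping these differences in data rather than in separate code paths is what lets one pipeline serve four very different customers.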

My workshop model (what I actually sell)

Enterprises don’t need “AI inspiration.” They need a reliable way to turn automation ideas into production decisions.

That’s why my work is structured like this:

| Stage | Purpose | Typical Outcome |
|---|---|---|
| Stage 0 – Clarity Call | Align on context, constraints, and what “success” means | Clear next step (or a conscious no) |
| Workshop 1 – Use Case Framing | Turn ideas into crisp, testable use cases | Prioritized use cases + success criteria |
| Workshop 2 – Feasibility & Prototype | Test with real constraints and real data | Validated feasibility + working prototype slice |
| Workshop 3 – Decision & Roadmap | Make a confident go/no-go decision and plan | Deployment plan, cost model, ownership, next steps |

The gaps are not delays. They’re where organizations do the work that makes AI projects survive reality.

If you’re building real AI solutions (classical ML, generative AI, or hybrid) and want a structured path from idea → feasibility → production decision, this is exactly what I support.